Our Workflow

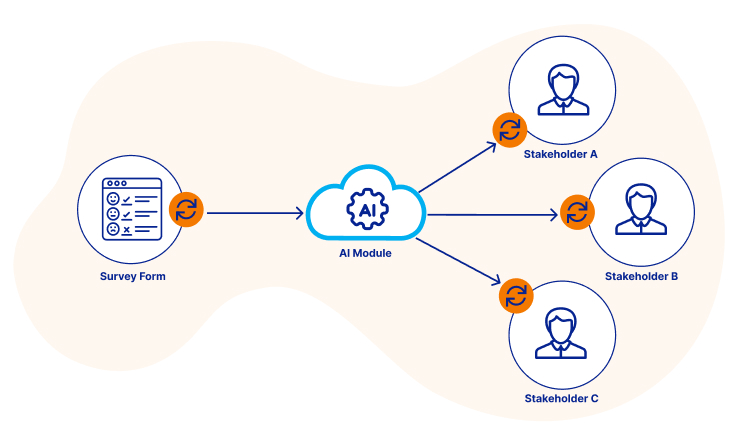

Our Automated Workflow for the NLP Solution

- Data Input: Raw survey responses are uploaded in Excel, the format the client already works with

- AI Analysis: Our NLP model reads each response and automatically identifies the topics and themes it mentions

- Stakeholder Mapping: The system routes each classified response to the team members who need to see that specific feedback
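The three steps above can be sketched as a small routing pipeline. This is a minimal illustration only, not the production system: it assumes simple keyword matching as a stand-in for the NLP model, and the topic names, keywords, and stakeholder IDs are all hypothetical. In practice the input rows could come from an Excel sheet, for example via pandas `read_excel(...).to_dict("records")`, which is left out here to keep the sketch dependency-free.

```python
from collections import defaultdict

# Hypothetical topic keywords; a stand-in for the trained NLP model.
TOPIC_KEYWORDS = {
    "pricing": {"price", "cost", "expensive", "cheap"},
    "support": {"support", "help", "agent", "response"},
    "usability": {"confusing", "easy", "interface", "navigate"},
}

# Hypothetical mapping from each topic to the stakeholders who should see it.
STAKEHOLDERS = {
    "pricing": ["finance_team"],
    "support": ["support_lead", "cx_manager"],
    "usability": ["product_team"],
}


def classify(response: str) -> set[str]:
    """Return every topic whose keywords appear in the response (multi-label)."""
    words = {w.strip(".,!?;:").lower() for w in response.split()}
    return {topic for topic, kws in TOPIC_KEYWORDS.items() if words & kws}


def route(responses: list[dict]) -> dict[str, list[str]]:
    """Send each response ID to every stakeholder of each topic it mentions."""
    inbox: dict[str, list[str]] = defaultdict(list)
    for row in responses:
        for topic in sorted(classify(row["response"])):
            for person in STAKEHOLDERS.get(topic, []):
                inbox[person].append(row["id"])
    return dict(inbox)


# Example rows, shaped as they might look after reading a spreadsheet.
rows = [
    {"id": "R1", "response": "The price is too high."},
    {"id": "R2", "response": "Support agent was helpful but the interface is confusing."},
]
print(route(rows))
# {'finance_team': ['R1'], 'support_lead': ['R2'], 'cx_manager': ['R2'], 'product_team': ['R2']}
```

Because classification is multi-label, a single response such as R2 can reach several teams at once, which matches the idea that each team sees only the feedback relevant to it.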

Free Sample

Free Sample