Garbage in, garbage out (GIGO) is a popular concept in computer programming that also applies to machine learning and artificial intelligence models. If your AI/ML model is fed inaccurate or irrelevant training data, its results will be unreliable. To train AI models to understand and interpret real-world scenarios, it is crucial to provide them with a large volume of high-quality training data. Annotating vast datasets, however, requires a large number of highly skilled annotators. For businesses that lack experienced annotators in-house or face budget constraints, outsourcing data labeling can be a strategic move.

In this blog post, we’ll look at the role of accurately labeled datasets in machine learning model training and how data annotation services can help you obtain them.

Why Do AI Models Require Accurately Labeled Training Datasets?

Whether they are built for object detection, natural language processing, or any other specific task, AI models start as blank slates. They need instructions (in the form of training data) to learn how to process and interpret information. Errors and inconsistencies in training data lead to inaccurate predictions, because the model fails to learn the context and meaning of the data it is working on.

Accurately labeled datasets provide ground truth for machine learning models. They ensure that the model’s outputs align with the actual attributes of the data, resulting in more trustworthy AI systems.

Let’s understand this with the help of an example:

In healthcare, accurately labeled datasets are critical for automated diagnostic tools used for medical imaging. Training a machine learning model to detect tumors in MRI scans requires numerous correctly annotated images. Incorrect training data can cause the model to misidentify healthy tissue as malignant or miss actual tumors, leading to severe consequences for patient health and treatment plans. Thus, accurately labeled datasets are required to ensure that the model can differentiate between subtle variations in images, improving diagnostic accuracy and supporting better clinical decisions.

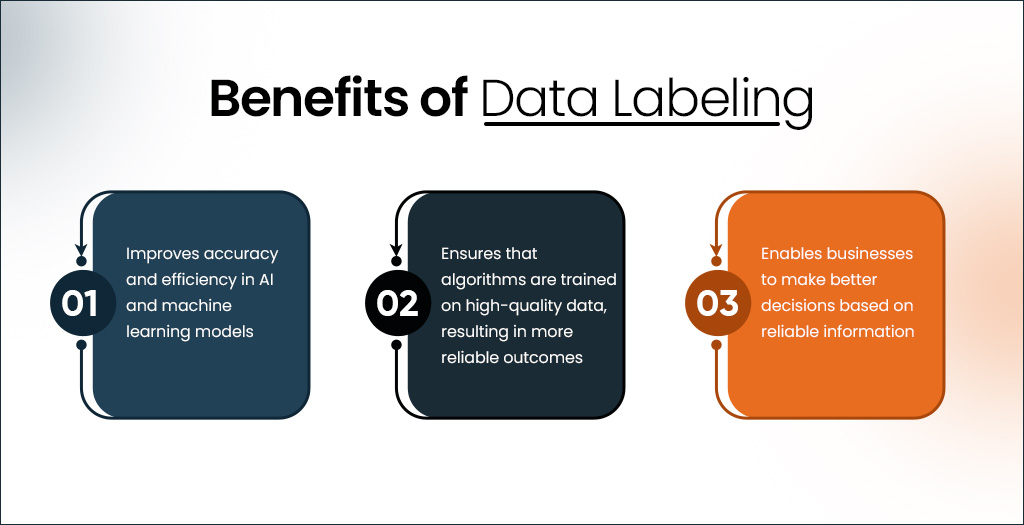

Benefits of Data Labeling

Popular Ways of Acquiring Accurately Labeled Datasets to Train AI Models for Better Outcomes

High-quality training data is the cornerstone of any successful AI/ML model. Several effective methods for acquiring this data exist, each with its own set of considerations.

- Setting Up In-House Data Annotation Teams

For specialized fields or complex tasks, businesses often prefer to employ domain experts in-house for data labeling. This is common in domains such as healthcare, finance, and scientific research, where subject matter expertise is required.

Key considerations involved with this approach:

- High costs

Setting up in-house teams requires significant investment. Annotators need to be trained to understand project requirements and annotation guidelines, which can be time-consuming and expensive for businesses.

- Ongoing management

Maintaining high-quality and consistent data labeling demands continuous oversight. Businesses need to manage the team regularly to ensure standards are met, which adds to operational costs.

- Scalability issues

As the volume of data grows, scaling up the annotation process can be difficult. Expanding the team and infrastructure to handle larger datasets increases costs and complexity.

- Crowdsourcing

Businesses can also use crowdsourcing platforms like Amazon Mechanical Turk, Appen, or Clickworker to distribute data labeling tasks to a large pool of annotators, saving time and resources. This approach is popular for tasks like image tagging, sentiment analysis, and data categorization.

Key considerations involved with this approach:

- Inconsistent quality

Since contributors on crowdsourcing platforms vary in skill and experience, the quality of labeled data can be inconsistent. Some annotations may be highly accurate, while others might be incorrect or incomplete.

- Training and instructions

Contributors need clear and precise instructions to perform their tasks correctly. Effective training materials and guidelines are essential. However, providing this to a diverse, dispersed workforce can be challenging.

- Potential bias

Crowdsourced contributors may introduce biases based on their personal backgrounds or interpretations. Implementing quality checks to minimize bias is crucial but time-consuming.

- Data security and privacy

Opting for crowdsourcing platforms may involve sharing sensitive data with numerous contributors, raising concerns about data security and privacy.
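
One common way to handle inconsistent quality is to collect several labels per item and keep only the labels that contributors agree on. The snippet below is a minimal sketch of majority-vote aggregation, not the method of any particular platform; the function name `aggregate_labels`, the 0.6 agreement threshold, and the sample votes are all illustrative assumptions.

```python
from collections import Counter


def aggregate_labels(votes, min_agreement=0.6):
    """Majority-vote one item's labels.

    Returns (label, agreement). The label is None when the share of
    contributors backing the top label is below min_agreement, which
    signals that the item should go to manual review.
    """
    if not votes:
        raise ValueError("votes must not be empty")
    label, top_count = Counter(votes).most_common(1)[0]
    agreement = top_count / len(votes)
    if agreement < min_agreement:
        return None, agreement
    return label, agreement


# Hypothetical sentiment votes from three contributors per review.
crowd_votes = {
    "review_1": ["positive", "positive", "positive"],
    "review_2": ["positive", "negative", "negative"],
    "review_3": ["positive", "negative", "neutral"],
}

results = {item: aggregate_labels(votes) for item, votes in crowd_votes.items()}
needs_review = [item for item, (label, _) in results.items() if label is None]

print(results["review_1"][0])  # positive
print(results["review_2"][0])  # negative
print(needs_review)            # ['review_3']
```

Items that fall below the threshold are not discarded; they are the ones worth sending to a more experienced reviewer.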

- Automated Labeling Using Pre-Trained Models

Automated data annotation platforms (such as Labellerr, Scale AI, and SuperAnnotate), which apply predefined rules or pre-trained models, can be used to label large datasets quickly.

Key considerations involved with this approach:

- Initial setup complexity

Setting up automated rule-based data labeling tools requires a thorough understanding of the machine learning training data and the specific rules needed for accurate annotation. This initial configuration can be complex and time-consuming.

- Integration challenges

Integrating automated annotation tools with existing systems and workflows can be difficult, especially if the tools are not compatible with the current technology stack.

- Continuous maintenance

To remain effective, rule-based data annotation tools need regular updates and maintenance. As machine learning training data evolves, the rules and algorithms must be adjusted, requiring ongoing technical expertise and resources.
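
To make the idea of rule-based labeling concrete, here is a minimal sketch that uses keyword rules to label support tickets. It does not reflect the API of any platform named above; the `RULES` list, the `rule_based_label` function, and the ticket texts are hypothetical. It also hints at the maintenance burden: every new category or change in vocabulary means editing the rules.

```python
import re

# Hypothetical rules: each pairs a keyword pattern with a label.
RULES = [
    (re.compile(r"\b(refund|charge|invoice)\b", re.IGNORECASE), "billing"),
    (re.compile(r"\b(crash|error|bug)\b", re.IGNORECASE), "technical"),
]


def rule_based_label(text):
    """Return a label when exactly one category matches, else None.

    Returning None (abstaining) on no match or on conflicting matches
    leaves the item for a human annotator instead of guessing.
    """
    matched = {label for pattern, label in RULES if pattern.search(text)}
    if len(matched) == 1:
        return matched.pop()
    return None


tickets = [
    "Please refund my last invoice",
    "The app shows an error and then a crash",
    "Refund me, the app has a bug",
    "How do I change my avatar?",
]

labels = [rule_based_label(ticket) for ticket in tickets]
print(labels)  # ['billing', 'technical', None, None]
```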

- Active Learning

Active learning is an iterative approach in which a machine learning algorithm identifies the most informative data points so that human annotators can label them first; less informative data points are annotated afterward. By focusing on the most informative data points, this approach optimizes the data labeling process and is especially useful when a large amount of data is available for annotation.

Key considerations involved with this approach:

- Requires human intervention

Active learning requires significant human intervention. Experts must label the selected data points accurately, and their involvement must be ongoing to ensure the process continues to yield high-quality data.

- Complex workflow management

Managing the workflow between the model’s selection process and human annotators is crucial for the method’s success but can be complicated.

- Scalability issues

As the volume of data increases, more iterations are needed, leading to increased demands on both human and computational resources.
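
The selection step in active learning is often implemented as uncertainty sampling: the model scores the unlabeled pool, and the items it is least sure about go to annotators first. The sketch below ranks items by the entropy of their predicted class probabilities. It is one possible selection strategy under stated assumptions; the `select_for_labeling` function and the probabilities in `pool` are invented for illustration and would come from your current model in practice.

```python
import math


def entropy(probs):
    """Shannon entropy of a probability distribution (higher = less certain)."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_labeling(predictions, batch_size):
    """Pick the batch_size items whose predictions are most uncertain.

    predictions maps an item id to the model's class probabilities.
    """
    ranked = sorted(predictions, key=lambda item: entropy(predictions[item]), reverse=True)
    return ranked[:batch_size]


# Hypothetical model outputs for an unlabeled pool (two classes).
pool = {
    "img_a": [0.98, 0.02],
    "img_b": [0.55, 0.45],
    "img_c": [0.70, 0.30],
    "img_d": [0.50, 0.50],
}

to_label = select_for_labeling(pool, batch_size=2)
print(to_label)  # ['img_d', 'img_b']
```

After annotators label the selected batch, the model is retrained and the pool is scored again, which is why each additional round adds both human and computational cost.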

- Using Publicly Available Datasets

Existing training datasets from academic, governmental, or open-source repositories can be utilized to train AI/ML models. Leveraging such datasets can significantly reduce the time and effort required to gather and preprocess data, enabling a faster model development process.

Key considerations involved with this approach:

- Outdated information

Publicly available training datasets may not be regularly updated, leading to outdated information. Using such data can cause models to make decisions based on obsolete or irrelevant data, affecting their effectiveness.

- Legal and ethical concerns

There could be legal or ethical issues related to the use of publicly available data, such as privacy concerns or licensing restrictions. It’s essential to ensure compliance with all relevant regulations and ethical standards.

- Relevance

Publicly available training datasets might not align perfectly with the specific requirements of your project. They could lack the necessary detail or focus needed for your particular use case, leading to suboptimal model performance.

How Can Data Labeling Services Be Helpful in Solving These Challenges?

Lack of accuracy, bias in training data, scalability issues, and data security and privacy concerns are common challenges in data labeling and acquiring pre-labeled training data. Outsourcing data labeling to a reliable third-party provider can effectively address these challenges. Here’s how partnering with a reputable data annotation company can benefit your business.

- Quality Assurance and Consistency

Several data labeling companies utilize a human-in-the-loop approach combined with multi-level quality assurance processes to ensure consistency and accuracy in annotated datasets. Automated data annotation tools generate initial outputs, which are then validated by subject matter experts to reduce errors and improve the quality of your machine learning training data.

- Subject Matter Expertise

Data labeling service providers often have access to domain experts in various fields, such as healthcare, legal, and scientific research. By applying their subject matter expertise and adhering to detailed annotation guidelines, these annotators can reduce bias in training datasets and provide high-quality, contextually accurate labels.

- Compliance and Security

Data annotation companies often hold certifications such as ISO/IEC 27001 for information security and adhere to strict compliance standards. They handle sensitive data in line with regulations such as GDPR and HIPAA to protect it and maintain data privacy.

- Scalability and Efficiency

With scalable infrastructure and large teams, data labeling service providers can efficiently handle and label large volumes of datasets. They can scale their workforce according to your business needs, ensuring projects are completed within the desired turnaround times.

- Cost-Effectiveness

Outsourcing data labeling helps businesses avoid significant investments in infrastructure and employee training. Many service providers offer flexible engagement models, allowing businesses to partner with them according to their specific project needs.

- Advanced Tools and Technology

Data labeling companies have access to widely used annotation tools. By leveraging advanced data annotation techniques and implementing automated checks, service providers can generate high-quality training datasets in less time.
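
The human-in-the-loop approach described under Quality Assurance and Consistency can be sketched as a simple routing step: an automated tool proposes labels with a confidence score, and low-confidence items are queued for a subject matter expert. This is only one possible variant (some providers have experts validate every pre-label); the `route_prelabels` function, the 0.9 threshold, and the sample records are assumptions made for illustration.

```python
def route_prelabels(prelabels, threshold=0.9):
    """Split automated pre-labels into auto-accepted and expert-review lists.

    Each pre-label is a dict with "id", "label", and "confidence" keys.
    Items at or above the threshold are accepted; the rest go to experts.
    """
    auto_accepted, expert_queue = [], []
    for item in prelabels:
        if item["confidence"] >= threshold:
            auto_accepted.append(item)
        else:
            expert_queue.append(item)
    return auto_accepted, expert_queue


# Hypothetical pre-labels produced by an automated annotation tool.
prelabels = [
    {"id": "scan_1", "label": "no_tumor", "confidence": 0.97},
    {"id": "scan_2", "label": "tumor", "confidence": 0.62},
    {"id": "scan_3", "label": "no_tumor", "confidence": 0.90},
]

accepted, queue = route_prelabels(prelabels)
print([item["id"] for item in accepted])  # ['scan_1', 'scan_3']
print([item["id"] for item in queue])     # ['scan_2']
```

In high-stakes domains such as medical imaging, the threshold would typically be set conservatively, or auto-acceptance would be disabled altogether.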

Final Thoughts

In the rapidly evolving world of AI and machine learning, the importance of high-quality, accurately labeled training datasets cannot be overstated. If you need annotated datasets at scale and want to overcome the challenges described above, data labeling services can be a strategic choice. From ensuring accuracy and reducing bias to maintaining data security and scalability, data labeling service providers can help you move your AI initiatives forward. By providing high-quality annotated datasets, they help ensure that machine learning models are trained with the precision and depth needed to perform well in real-world applications. As AI continues to transform industries, investing in high-quality data labeling will be a key differentiator in building reliable and impactful AI solutions.