Our Solution

Accurate Aerial Video Labeling through Manual Frame Adjustment and Bounding Box Annotation

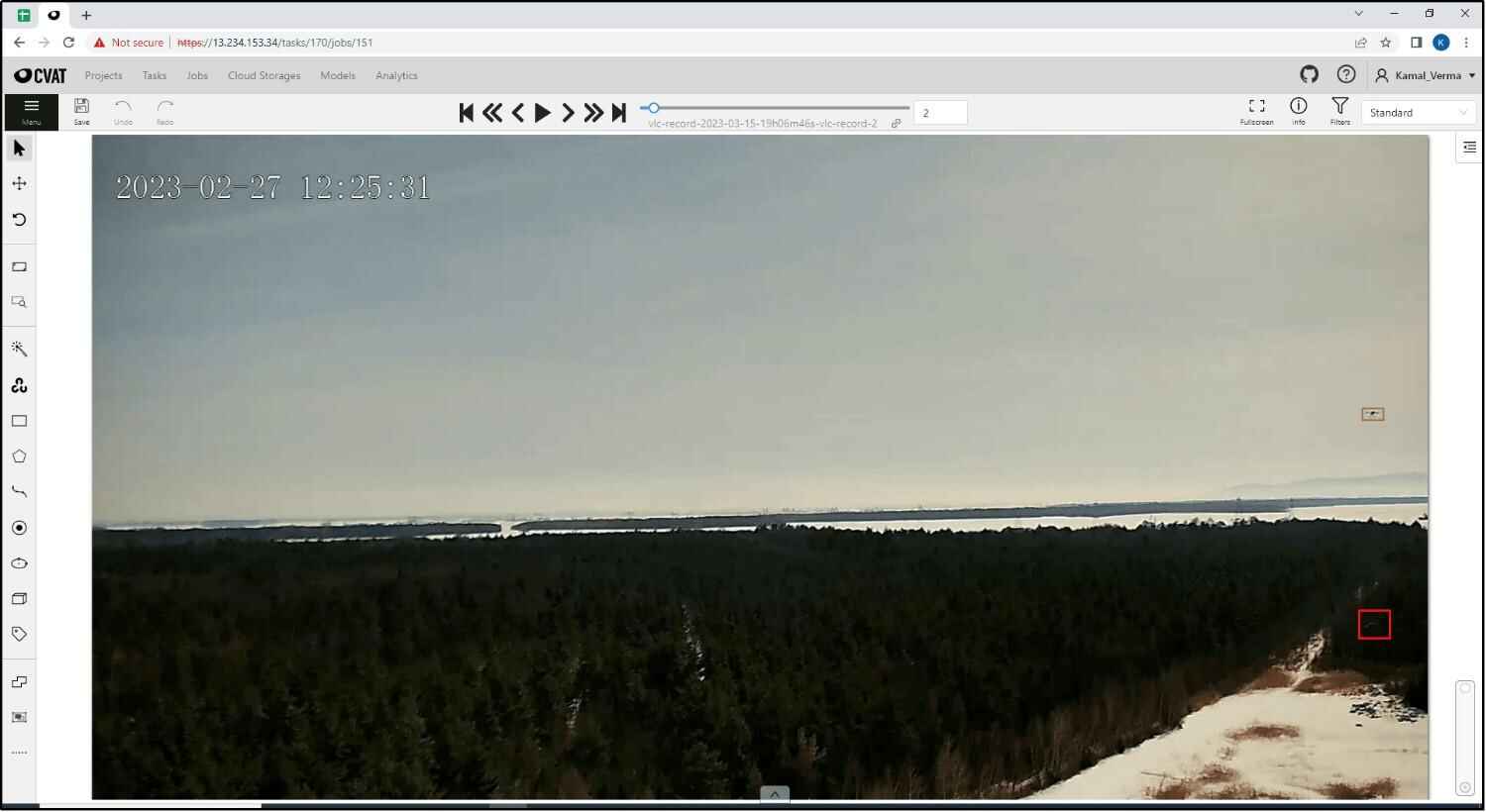

To overcome these challenges, we deployed a dedicated team of 20 data annotators who specialize in labeling aerial footage, working in CVAT, the annotation tool specified by the client. Our approach involved:

Enhancing Frame Opacity

For infrared footage, our team adjusted frame opacity to make the drones easier to see and label accurately.
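
Opacity changes are commonly modeled as alpha blending of an image over a background. The minimal sketch below illustrates that arithmetic on a single grayscale infrared frame. The function name `adjust_opacity`, the uniform background value, and the 8-bit grayscale list layout are all assumptions for illustration; this is not how CVAT implements its display settings.

```python
def adjust_opacity(frame, alpha, background=0):
    """Hypothetical helper: blend a grayscale frame over a uniform background.

    Each output pixel is alpha * pixel + (1 - alpha) * background,
    rounded and clamped to the 8-bit range 0-255.
    frame: 2-D list of intensities; alpha: opacity in [0, 1].
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    return [
        [min(255, max(0, round(alpha * p + (1 - alpha) * background))) for p in row]
        for row in frame
    ]


# Example: 75% opacity over a mid-gray background lifts dark pixels and softens bright ones.
print(adjust_opacity([[0, 255]], 0.75, background=128))  # [[32, 223]]
```

With `alpha=1.0` the frame is returned unchanged; lower values pull every pixel toward the background, which can soften glare in very bright infrared frames.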

Manual Frame Adjustments

To compensate for unpredictable drone movements, our annotators carefully analyzed each frame and made manual adjustments, drawing bounding boxes around the drones. They also assigned each drone a unique identifier so that it could be tracked consistently across frames.
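
One way to picture per-frame boxes with persistent identifiers is a flat list of annotations keyed by frame and track ID. The sketch below is a hypothetical data model, not CVAT's export format: the class names, the `(x, y, w, h)` box convention, and both helper functions are assumptions. It groups annotations into per-drone tracks and flags any ID that is reused for two boxes in the same frame.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned bounding box in pixels: top-left corner (x, y), width w, height h."""
    x: float
    y: float
    w: float
    h: float


@dataclass(frozen=True)
class Annotation:
    frame: int
    track_id: int  # hypothetical: the unique identifier kept for one drone across frames
    box: Box


def group_by_track(annotations):
    """Return {track_id: [annotations sorted by frame]} so each drone's path can be reviewed."""
    tracks = defaultdict(list)
    for ann in annotations:
        tracks[ann.track_id].append(ann)
    return {tid: sorted(anns, key=lambda a: a.frame) for tid, anns in tracks.items()}


def duplicate_ids(annotations):
    """Return (frame, track_id) pairs that appear more than once; one ID should mean one drone per frame."""
    seen, dupes = set(), set()
    for ann in annotations:
        key = (ann.frame, ann.track_id)
        if key in seen:
            dupes.add(key)
        seen.add(key)
    return dupes


anns = [
    Annotation(1, 1, Box(14, 12, 20, 20)),
    Annotation(0, 1, Box(10, 10, 20, 20)),
    Annotation(0, 2, Box(200, 50, 18, 18)),
]
print([a.frame for a in group_by_track(anns)[1]])  # [0, 1]
print(duplicate_ids(anns))  # set()
```

Keeping the ID on every annotation, rather than inferring it from box positions, is what lets a reviewer follow a single drone even when its motion between frames is erratic.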

Collaborative Feedback Loop

We maintained an iterative feedback cycle with the client, which let us make real-time adjustments and refinements as their needs and insights evolved.

Quality Control

We established a multi-level quality assurance (QA) process to ensure annotation accuracy and consistency. It involved three stages:

- Initial Review: Each annotated frame was initially reviewed by senior annotators to verify the accuracy of bounding boxes and identifiers.

- Secondary Review: A secondary QA team conducted random checks on annotated frames to identify and rectify any inconsistencies.

- Final Approval: Project managers conducted a final review to ensure that all annotations met the client's specifications and quality standards.
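
The secondary review's random checks could be approximated by sampling a fraction of frames and comparing each annotator box against a reviewer's box for the same track. The source does not say which agreement metric was used; the sketch below assumes intersection over union (IoU), and the sampling rate, the 0.9 threshold, the `{frame: {track_id: (x, y, w, h)}}` layout, and all function names are hypothetical.

```python
import random


def iou(a, b):
    """Intersection over union of two boxes given as (x, y, w, h)."""
    ax2, ay2 = a[0] + a[2], a[1] + a[3]
    bx2, by2 = b[0] + b[2], b[1] + b[3]
    iw = max(0.0, min(ax2, bx2) - max(a[0], b[0]))
    ih = max(0.0, min(ay2, by2) - max(a[1], b[1]))
    inter = iw * ih
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def sample_frames(frame_ids, rate, seed=0):
    """Pick a random fraction of frames for spot checks; a fixed seed keeps the sample reproducible."""
    frame_ids = list(frame_ids)
    k = max(1, round(len(frame_ids) * rate))
    return sorted(random.Random(seed).sample(frame_ids, k))


def flag_disagreements(annotator, reviewer, frames, threshold=0.9):
    """Return (frame, track_id) pairs where a box is missing on either side or IoU < threshold."""
    flagged = []
    for f in frames:
        a_boxes = annotator.get(f, {})
        r_boxes = reviewer.get(f, {})
        for track_id in sorted(set(a_boxes) | set(r_boxes)):
            a, r = a_boxes.get(track_id), r_boxes.get(track_id)
            if a is None or r is None or iou(a, r) < threshold:
                flagged.append((f, track_id))
    return flagged


annotator = {0: {1: (0, 0, 10, 10), 2: (50, 50, 10, 10)}}
reviewer = {0: {1: (0, 0, 10, 10), 2: (55, 50, 10, 10), 3: (90, 90, 8, 8)}}
print(flag_disagreements(annotator, reviewer, [0]))  # [(0, 2), (0, 3)]
```

Flagging missing boxes as well as low-overlap ones catches both misplaced boxes and drones the annotator skipped; flagged frames would then go back for correction before final approval.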
